In the lead-up to his time on the show, we refrained from testing him with trivia, but we did get into some serious debates about wagering strategy. One of the more specific scenarios we spent time on was the right wager to make when the last clue of Double Jeopardy is a Daily Double.

The root of the discussion is what I'm calling the Stegner Conjecture, named for the colleague who was adamant about making a big wager to put the game away, as long as you see the category as neutral or better. His perspective is that you know more about the Daily Double category than you do about the Final Jeopardy question, so you should use that to your advantage and put the game away.

While I understood his logic, I wasn't sure I would necessarily bet by his rules if I were ever placed in that spot. But... that opinion may have changed as I worked through the problem.

So first, let’s establish the scenario. For the sake of simplicity, we focused on the case where just two players will compete in the Final Jeopardy round. The two players are separated by a small amount of money, and the player in the lead gets a Daily Double for the final clue of Double Jeopardy. Assume that this player (P1) has $10,200 and the second player (P2) has $10,000. Now, there are three basic betting options:

- Bet big to put the game away (The Stegner Conjecture) – we’ll fix this amount at $10,000; a correct response would guarantee that P1 wins the match, as they would have $20,200, more than double P2's score

- Bet very small – we’ll fix this amount at $0; this means the game is determined by the contestants’ bets and responses in Final Jeopardy

- Wager an amount in the middle – we’ll fix this amount at $5,000; P1 will have $15,200 or $5,200 for Final Jeopardy
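The arithmetic behind the three options can be sketched in a few lines. This is only an illustration of the scores described above; the function and variable names are my own and not part of the original analysis.

```python
# Scores going into the Daily Double, as given in the scenario above.
P1_START = 10_200
P2_SCORE = 10_000

def scores_after_daily_double(wager):
    """Return P1's score after a correct and after an incorrect response."""
    return P1_START + wager, P1_START - wager

for label, wager in [("big", 10_000), ("zero", 0), ("medium", 5_000)]:
    right, wrong = scores_after_daily_double(wager)
    # A "lock" means P2 cannot catch P1 even by doubling their score.
    lock_if_right = right > 2 * P2_SCORE
    print(f"{label:>6}: right=${right:,}  wrong=${wrong:,}  lock_if_right={lock_if_right}")
```

Only the big wager produces a lock after a correct response, which is the whole appeal of the Stegner Conjecture.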

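To model Final Jeopardy itself, I used the historical distribution of outcomes for the two players (the leader's response first, the trailer's second):

- Right Right: 34%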

- Right Wrong: 27%

- Wrong Wrong: 25%

- Wrong Right: 14%

For what it's worth, this degree of variation from an even distribution (25% - 25% - 25% - 25%) does pass a chi-square test for significance (p < 0.05). That said, I also modeled the outcomes using a uniform distribution for comparison purposes.
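Whether that test passes depends on how many games sit behind the percentages, which is not stated here. As a rough sketch, assuming a standard chi-square goodness-of-fit test with 3 degrees of freedom and treating the rounded percentages as exact shares, the snippet below estimates the smallest sample size at which these shares would differ significantly from uniform. The names are illustrative only.

```python
import math

# Shares of Right/Right, Right/Wrong, Wrong/Wrong, Wrong/Right from the list above.
observed_share = [0.34, 0.27, 0.25, 0.14]
expected_share = 0.25  # uniform distribution over four outcomes

# Chi-square critical value for 3 degrees of freedom at alpha = 0.05.
CRITICAL_DF3_05 = 7.815

# With n games, observed count = n * share and expected count = n * 0.25,
# so the statistic scales linearly with n.
per_game = sum((s - expected_share) ** 2 / expected_share for s in observed_share)

def chi_square_statistic(n_games):
    """Goodness-of-fit statistic if the shares came from n_games games."""
    return n_games * per_game

min_games = math.ceil(CRITICAL_DF3_05 / per_game)
print(f"per-game contribution: {per_game:.4f}, minimum games: {min_games}")
```

Under these assumptions, a sample of roughly a hundred games or more would be enough for the stated significance level.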

Now, for each betting scenario, I determined the Final Jeopardy betting strategy that would give each contestant the best chance of winning. In some situations, the trailing player's bet would be irrelevant, as a leader who bets rationally could not lose.

Based on this analysis, P1 has the following chance of winning for each betting scenario. The first number assumes an even distribution; the second uses the historical distribution.

Stegner Conjecture

- Right answer on Daily Double: 100% / 100%

- Wrong answer on Daily Double: 0% / 0%

Medium Wager

- Right answer on Daily Double: 75% / 86%

- Wrong answer on Daily Double: 25% / 14%

Low/no Wager: 50% / 61%
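These figures can be reproduced from the two outcome distributions. The sketch below rests on my own reading of the rational Final Jeopardy bets, which the text above does not spell out: the leader bets just enough to cover the trailer doubling up, and the trailer bets enough to pass the leader if the leader misses. The situation labels and function name are hypothetical.

```python
# Final Jeopardy outcome probabilities, keyed as (leader_right, trailer_right).
HISTORIC = {(True, True): 0.34, (True, False): 0.27,
            (False, False): 0.25, (False, True): 0.14}
UNIFORM = {outcome: 0.25 for outcome in HISTORIC}

def p1_win_probability(dist, situation):
    """P1's chance of winning, given the game state entering Final Jeopardy.

    Assumed (not stated in the source) betting behavior: the leader covers
    the trailer's doubled score, and the trailer bets to overtake a leader
    who answers incorrectly.
    """
    if situation == "locked":       # big wager, Daily Double right
        return 1.0
    if situation == "out":          # big wager, Daily Double wrong
        return 0.0
    if situation == "narrow_lead":  # zero wager: P1 leads and wins whenever P1 is right
        return sum(p for (leader_right, _), p in dist.items() if leader_right)
    if situation == "wide_lead":    # medium wager, right: P1 loses only on leader wrong, trailer right
        return 1.0 - dist[(False, True)]
    if situation == "trailing":     # medium wager, wrong: P1 is the trailer and needs leader wrong, trailer right
        return dist[(False, True)]
    raise ValueError(f"unknown situation: {situation}")

for name in ("narrow_lead", "wide_lead", "trailing"):
    print(name, p1_win_probability(UNIFORM, name), round(p1_win_probability(HISTORIC, name), 2))
```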

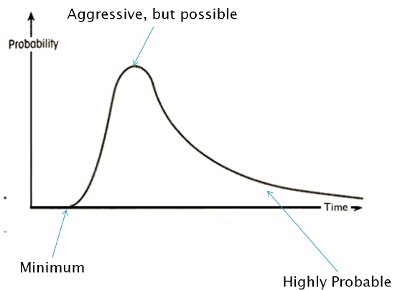

So now, the only task left is to assign a probability to P1 answering the Daily Double correctly, and then calculate P1's expected outcome for each betting scenario at that probability.

*Expected value of the game, based on historic Final Jeopardy outcomes*

*Expected value of the game, based on a uniform distribution of Final Jeopardy outcomes*

Given this, the model suggests that:

- If you go by historic figures, you should bet big if you think you have greater than a 61% chance of getting the Daily Double correct, otherwise you should bet zero

- If you go by an even distribution, you should bet big if you have at least a 50/50 chance, otherwise you should bet zero

- There is no scenario where a medium wager is optimal
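The thresholds above follow from weighting each wager's two win probabilities by the chance p of answering the Daily Double correctly. Here is a minimal sketch using the win percentages listed earlier; the function names and dictionary layout are my own, chosen for illustration.

```python
# (win probability if Daily Double is right, win probability if wrong),
# taken from the win percentages listed earlier in the post.
SCENARIOS = {
    "historic": {"big": (1.0, 0.0), "medium": (0.86, 0.14), "zero": (0.61, 0.61)},
    "uniform":  {"big": (1.0, 0.0), "medium": (0.75, 0.25), "zero": (0.50, 0.50)},
}

def expected_win(p, win_if_right, win_if_wrong):
    """Overall chance of winning given probability p of a correct Daily Double."""
    return p * win_if_right + (1 - p) * win_if_wrong

def best_wagers(p, model):
    """Return the set of wagers tied for the highest expected win chance."""
    values = {name: expected_win(p, *pair) for name, pair in SCENARIOS[model].items()}
    top = max(values.values())
    return {name for name, value in values.items() if abs(value - top) < 1e-9}

for p in (0.40, 0.50, 0.61, 0.70):
    print(p, best_wagers(p, "historic"), best_wagers(p, "uniform"))
```

Under the historic figures, the big wager's expected value is simply p, so it beats the zero wager's flat 61% only when p exceeds 0.61. The medium wager works out to 0.14 + 0.72p, which beats the big wager only below p = 0.5, where the zero wager is already better. Under the uniform figures, all three wagers tie at exactly p = 0.5, so the medium wager is never strictly best there either.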

So in the end, the Stegner Conjecture holds, although I'll admit that I would find it difficult to make that big of a bet unless I felt significantly more confident than the 50% or 61% suggested above. Part of that is the emotional player winning out over the rational player, but it is also an indication of how difficult it is to put a number on your confidence in a situation like the one above.